“I would love to have one unified, federal law that effectively addresses AI safety. Congress has not passed such a law. Congress has not even come close to passing such a law,” said Democratic State Senator Scott Wiener of San Francisco. He is one of a growing number of California lawmakers rolling out legislation that could serve as a model for other states, if not for the federal government. Wiener argues that his Senate Bill 1047 is the most ambitious proposal in the country so far. Because he was just named Senate Budget chair, he is arguably better positioned than anyone else in the state Capitol to pass aggressive legislation that is also well funded.

SB 1047 would require companies building the largest and most powerful AI models — not the wee startups — to test for safety before releasing those models to the public. What does that mean? Here’s some language from the legislation as currently written:

“If not properly subject to human controls, future development in artificial intelligence may also have the potential to be used to create novel threats to public safety and security, including by enabling the creation and the proliferation of weapons of mass destruction, such as biological, chemical, and nuclear weapons, as well as weapons with cyber-offensive capabilities.”

AI companies would have to tell the state about their testing protocols and guardrails, and if the technology causes “critical harm,” California’s attorney general could sue. Wiener says his legislation draws heavily on the Biden administration’s 2023 executive order on AI.

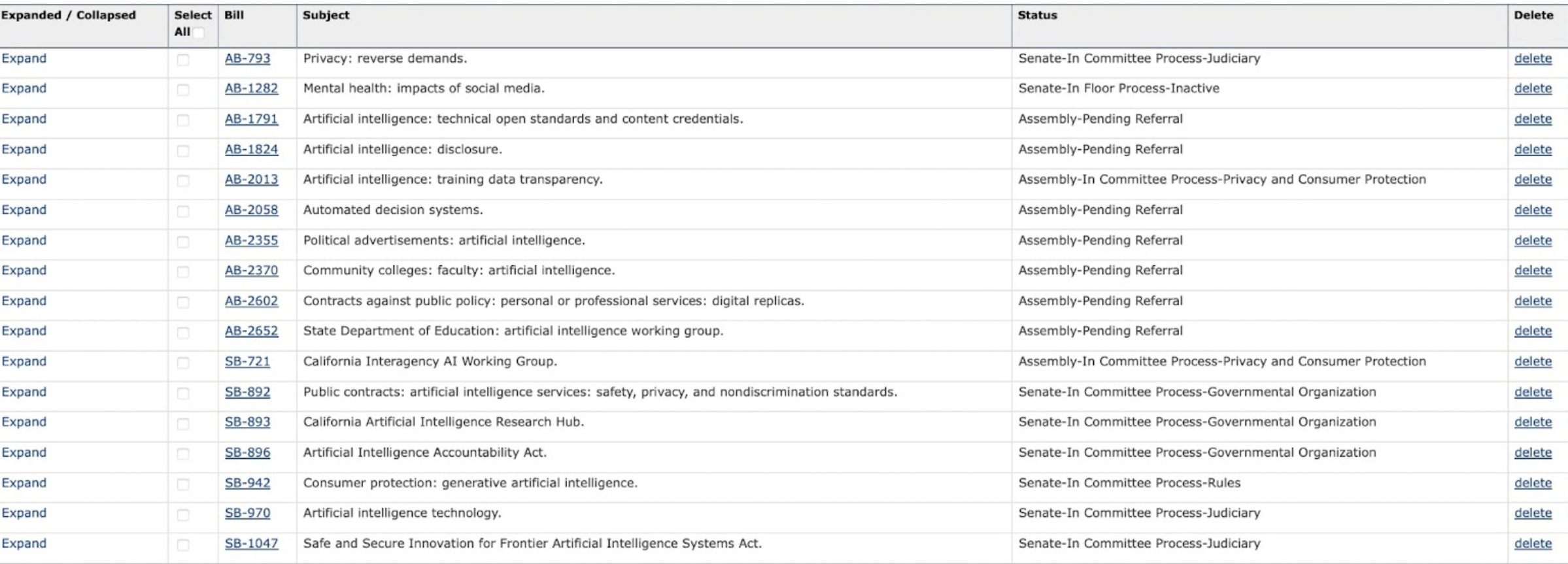

Catch up fast: By the count of BSA, a software industry alliance, more than 400 AI-related bills are pending across 44 states. But California’s size and sophistication make the roughly 30 bills pending in Sacramento the most likely to be seen as legal landmarks, should they pass. Many of the largest companies working on generative AI models are also based in the San Francisco Bay Area. OpenAI, Anthropic, Databricks and Scale AI are all headquartered in San Francisco; Meta is in Menlo Park, and Google is in Mountain View. Microsoft, based in Redmond, Washington, and Seattle-based Amazon both have offices in the Bay Area. According to the Brookings Institution, more than 60% of generative AI jobs posted in the year ending in July 2023 were clustered in just 10 U.S. metro areas, with the Bay Area far in the lead.

The context: The Federal Trade Commission and other regulators are exploring how to use existing laws to rein in both AI developers and the individuals and organizations that use AI to break the law, but many experts say that won’t be enough. FTC Chair Lina Khan raised this question during an FTC summit on AI last month: “Will a handful of dominant firms concentrate control over these key tools, locking us into a future of their choosing?”