“We really wanted to first start with the landscape, which is, what are adolescents doing? What platforms are they using, and what is the purpose to which they put them?” said Amanda Lenhart, head of research at Common Sense, which examines the impact of technology on young people. “Then we wanted to drill a little bit more specifically into educational uses. So how are they using it for school? How are teachers talking about it? How are schools talking about it with parents?”

This study did not talk to educators, but it sheds refracted light on several key educational questions through the experiences of parents and teenagers.

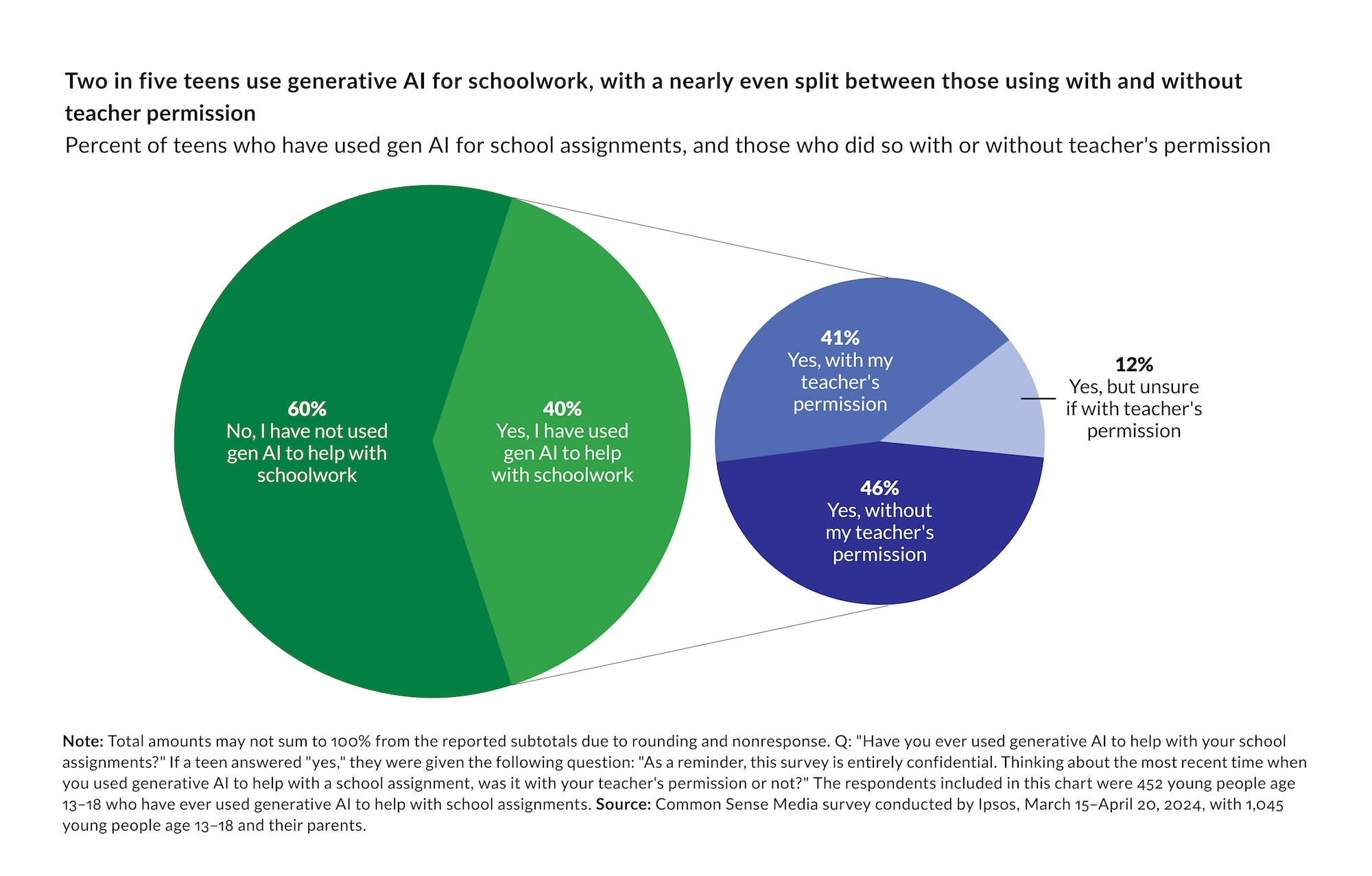

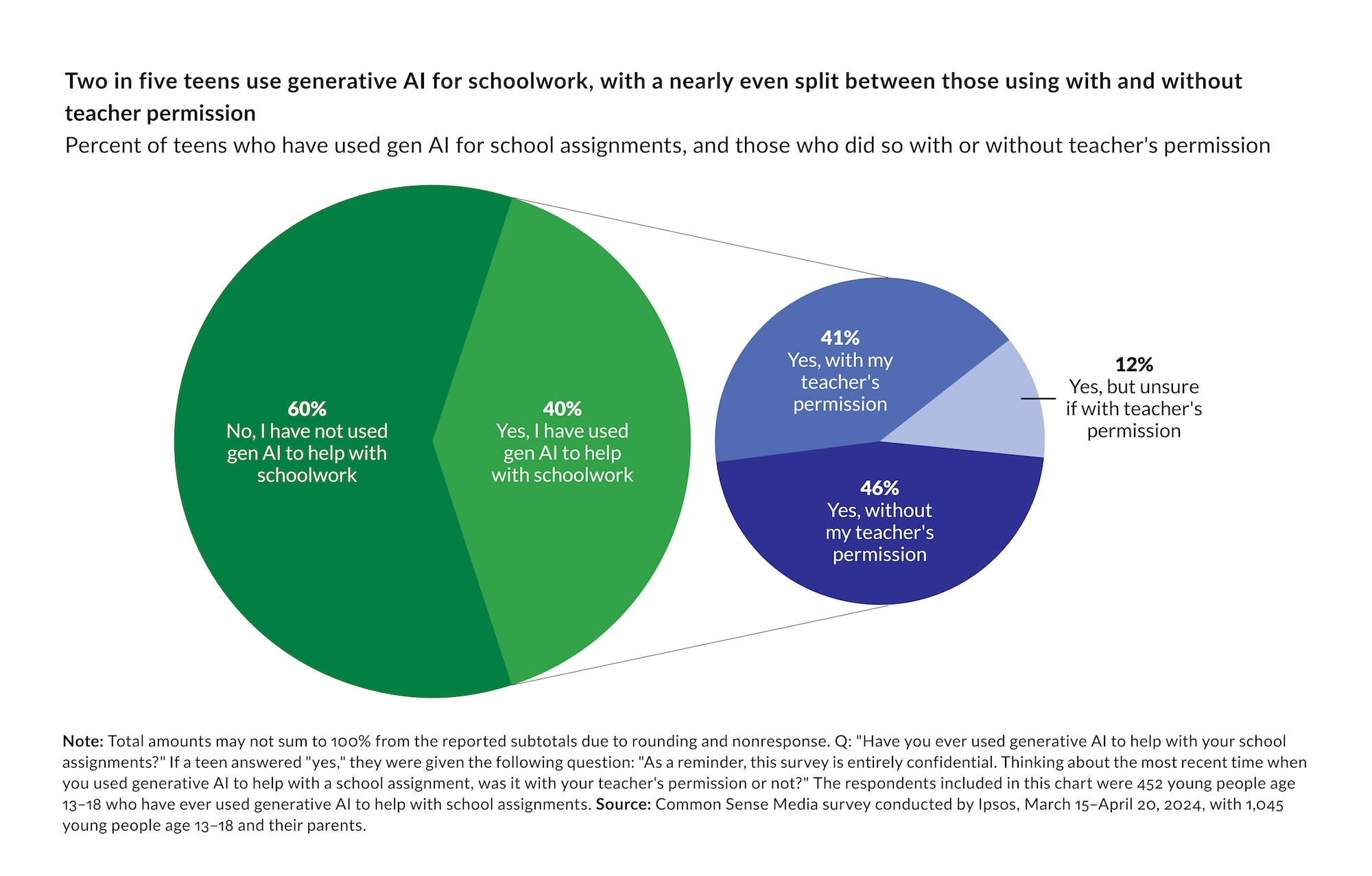

About 70% of teens have used at least one kind of AI tool. A little over half — 51% — have used chatbots or text generators like ChatGPT, Microsoft Copilot and Google’s Gemini, Common Sense found. More than half (53%) of students say they use AI for homework.

“They don’t have the guidance to know what it is they’re supposed to be doing,” Lenhart said. “Thirty-seven percent say they don’t even know if there are rules at their school. That leaves parents who are starting to walk into experimenting and using AI in their own lives really at sea about what their kids are doing.” She added that about a quarter of parents don’t think their kids are using AI, even though those very same children told Common Sense that they are.

“I’m super sympathetic to the challenges that teachers and administrators find themselves in. But we need to talk about this,” Lenhart said.

In response to the Common Sense Media study, teacher Melissa Donnelly-Gowdy, who is currently at River City High School in West Sacramento, wrote KQED that she may be referencing it during her English 11 rhetoric unit next term.

“I use AI every day — mainly ChatGPT or Google Search AI in class with students and to prepare lesson plans, differentiate instruction, or get sample writing based on my assignments,” wrote Donnelly-Gowdy, who has been teaching for more than 15 years. “I want students to use AI because it’s not going away, but I also want them to grow in their integrity and critical thinking skills.”

Donnelly-Gowdy said that earlier this week, students could use ChatGPT if they wanted or needed to for a hybrid assignment, a Modern Barbie Doll Pitch. She said most of the students used AI, but a few “more storyteller-inclined” students created their own stories. She explained to them that this was one example of how AI could be used as a tool and a starting point for assignments.

Addressing concerns about AI being used to cheat, Donnelly-Gowdy said, “I have graded AI work and passed it through because I couldn’t find a way to prove it, but for the most part AI work is easy for me to identify. Students are going to use it to cheat, just like they have been using other things to cheat.”